Teaching AI to Skim Long Documents

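Imagine this: a sprawling, lengthy report lands on your desk, and you need to pull out the key information right away. AI is your strategic advantage. But using modern AI to process and analyze large volumes of data effectively is not as simple as clicking an "Upload" button. Standard approaches often hit major obstacles, leading to wasted time, higher costs, and poorly processed results.

Let's look at the common but flawed ways of tackling this challenge: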

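- You can upload the entire document as a knowledge base. This usually triggers a chunking and indexing process that builds the document into a retrieval-augmented generation (RAG) database. While technically viable, this requires substantial upfront planning: deciding how to segment the document and deploying a full RAG pipeline, with complex steps such as chunking, vector embedding, and data storage.

- A simpler attempt is to copy and paste the text directly into the AI interface. But this only works for smaller inputs. Once the report exceeds the model's context window (for example, the roughly 150,000 to 200,000-token limits typical of current models from vendors such as OpenAI), the session inevitably breaks down and has to be reset entirely.

- Another option is to upload the report to a dedicated platform such as NotebookLM and start an interactive analysis. For particularly large documents, the tool uses an architecture similar to the RAG solution above. NotebookLM is an extremely useful tool, but it does not let users directly control how the document is split or which sections are prioritized.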
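For the copy-paste route, a quick pre-check can tell you whether a report is even close to fitting. The sketch below is an illustration, not part of the original article. It assumes a rough rule of thumb of about 4 characters per token, although real tokenizers differ by model and language. The 200,000-token window and the 4,000-token reply reserve are also illustrative assumptions, not any specific model's limits.

```python
import math

# Assumption: about 4 characters per token, a common rule of thumb for English.
# Real tokenizers differ by model and language; use the provider's tokenizer
# when you need an exact count.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str, chars_per_token: int = CHARS_PER_TOKEN) -> int:
    """Rough token estimate from character count, rounded up."""
    return math.ceil(len(text) / chars_per_token)


def fits_in_context(text: str, context_tokens: int, reserve_for_output: int = 4_000) -> bool:
    """True if the estimated input still leaves `reserve_for_output` tokens for the reply."""
    return estimate_tokens(text) + reserve_for_output <= context_tokens


if __name__ == "__main__":
    report = "lorem ipsum " * 100_000          # ~1.2 million characters
    print(estimate_tokens(report))              # 300000
    print(fits_in_context(report, 200_000))     # False
```

A report that fails this check will not survive the copy-paste approach, and that is exactly the case skimming is meant for.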

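These options are only essential when reading the document in full is a non-negotiable requirement.

But here is the key question: what if your goal doesn't require reading the whole document?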

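Consider the efficient strategy people use when faced with a large volume of text: skimming. Whether browsing in a library or reading a technical brief, we quickly scan the material to grasp its core themes and main points. Only after this first pass do we read more deeply and selectively, and usually not in full.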

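The paradigm shift is giving AI the ability to emulate this human skimming process. That capability lets AI:

- Survey massive datasets quickly and strategically.

- Deliver the essential summary, extracting only the document's core points, while you steer its focus toward specific areas of interest.

- Dive into specific details only when needed.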
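The skim-first, dive-later idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the article's method. It works on plain text that is already split into lines. The helper names `skim_lines` and `dive` are hypothetical, and the defaults (`every=100`, `window=5`) are arbitrary choices.

```python
def skim_lines(lines: list[str], every: int = 100, window: int = 5) -> list[tuple[int, str]]:
    """First pass: take `window` lines at the start of every `every`-line stretch.

    Returns (1-based line number, text) pairs, roughly what
    `sed -n '1,5p;101,105p;201,205p'` would print for a 300-line file.
    """
    picked = []
    for start in range(0, len(lines), every):
        for idx in range(start, min(start + window, len(lines))):
            picked.append((idx + 1, lines[idx]))
    return picked


def dive(lines: list[str], first: int, last: int) -> list[str]:
    """Second pass: return lines first..last (1-based, inclusive) for a close read."""
    return lines[first - 1:last]


if __name__ == "__main__":
    doc = [f"line {n}" for n in range(1, 1001)]   # stand-in for a 1,000-line file
    sample = skim_lines(doc)
    print(len(sample), "of", len(doc), "lines skimmed")  # 50 of 1000 lines skimmed
    print(sample[5])                                     # (101, 'line 101')
    print(dive(doc, 498, 500))                           # ['line 498', 'line 499', 'line 500']
```

The skim touches 5% of the lines. The targeted `dive` is only spent on ranges the skim flagged as interesting.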

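This approach is powerful, directly addresses the limitations of the methods above, and delivers significant, measurable benefits:

- Sharply lower processing costs.

- Noticeably faster responses.

- Higher analysis quality, because the AI works from a focused, highly relevant context.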

Better still, it requires no installation, chunking, or database access; all you need is a shell and a prompt.

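Implementing the technique only requires an AI environment that meets the following technical criteria:

- Access to a capable LLM such as Claude, OpenAI's GPT models, or Kimi. For instance, even Claude Haiku 4.5, the smallest model in the Claude lineup, proved fully sufficient in testing, delivering strong performance at a very competitive price (about $1 per million input tokens).

- The ability to execute code, ideally in a restricted, secure sandbox; or

- A controlled, safer mechanism (such as cURL or wget) for fetching content.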
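The instruction prompt in this article insists that every command's output be capped. If you drive the model's shell access from your own harness, a minimal Python sketch of that idea might look like the following. `run_bounded` is a hypothetical helper, not part of any agent framework. It is no substitute for a real sandbox: it only trims what is passed back to the model.

```python
import subprocess
import sys


def run_bounded(cmd: list[str], max_lines: int = 20, max_chars: int = 4_000,
                timeout: float = 10.0) -> str:
    """Run `cmd` and return at most `max_lines` lines / `max_chars` characters of stdout.

    Truncation happens after the command exits, so this only limits what is
    handed back to the model; it is not a sandbox and does not cap memory.
    """
    result = subprocess.run(cmd, capture_output=True, text=True,
                            timeout=timeout, check=False)
    kept = result.stdout.splitlines()[:max_lines]
    return "\n".join(kept)[:max_chars]


if __name__ == "__main__":
    # Stand-in for a command that would flood the context: prints 1,000 lines.
    noisy = [sys.executable, "-c", "for i in range(1000): print(i)"]
    print(len(run_bounded(noisy).splitlines()))  # 20
```

Where possible, put the limit in the command itself, as the prompt requires (for example `head -n 20 report.txt`, with a hypothetical file name). A wrapper like this is a second line of defense.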

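Execution takes a single step: submit the detailed instruction prompt together with the large data source (files, websites, etc.) you want analyzed.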

Here is the complete instruction prompt. Copy and paste it into your AI and specify the location of the file or data:

You are analyzing a file to determine its content. Follow these STRICT rules:

Output Limits (Non-negotiable):

Max 100 words per analysis check

2000 words total for entire task

ONLY use Bash with CLI utilities (head, tail, strings, grep, sed, awk, wc, etc.)

These tools MUST have built-in output limits or you MUST add limits (e.g., head -n 20, strings | head -100)

Mandatory Pre-Tool Checklist:

Before EVERY tool call, ask yourself:

Does this tool have output limitations?

If NO → DO NOT USE IT, regardless of user request

If YES → Verify the limit is sufficient for task

If uncertain → Ask user for guidance instead

Tools FORBIDDEN:

Read (no output limit guarantee)

Write (creates files)

Edit (modifies files)

WebFetch (unlimited content)

Task (spawns agents)

Any tool without explicit output constraints

Compliance Rule:

Breaking these rules = CHEATING. Non-negotiable. Stop immediately if constrained.

After Every Message:

Display: "CONSTRAINT CHECK: Output used [X]/2000 words. Status: COMPLIANT"

If you come across any missing information or errors, or if you encounter any problems, stop. Never improvise. Never guess. This is considered fraud.

COMPLETE ANTI-CHEATING INSTRUCTIONS FOR DOCUMENT ANALYSIS

Examples:

1. DEFINE SCOPE EXPLICITLY

- State: "I will read X% of document"

- State: "I will examine pages A-B only"

- State: "I will sample sections X, Y, Z"

2. DIVIDE DOCUMENT INTO EQUAL SECTIONS

- Split entire document into 6-8 chunks

- Track chunk boundaries explicitly

- Sample from EACH chunk proportionally

- Never skip entire sections

3. STRATIFIED SAMPLING ACROSS FULL LENGTH

- Beginning: First 10% (pages 1-17 of 174)

- 25%: Pages 44-48

- 50%: Pages 87-91

- 75%: Pages 131-135

- End: Last 10% (pages 157-174)

- PLUS: Every Nth page throughout (e.g., every 15th page)

4. SYSTEMATIC INTERVALS, NOT RANDOM

- Read every 20th page if document is long

- Read every 100th line in large files

- Use sed -n to pull from multiple ranges

- Mark: "sampled pages 1-5, 25-30, 50-55, 75-80, 150-155, 170-174"

5. MAP DOCUMENT STRUCTURE FIRST

- Find section headers/breaks

- Identify chapter boundaries

- Sample proportionally from EACH section

- Don't assume homogeneous content

6. SPOT CHECK THROUGHOUT ENTIRE LENGTH

- grep random terms from middle sections

- head -n <line at mark> | tail -n 100 at the 25%, 50%, and 75% marks

- sed -n to extract from 5+ different ranges

- Verify patterns hold across entire document

7. VERIFY CONSISTENCY ACROSS DOCUMENT

- Does middle content match beginning patterns?

- Do citations/formats stay consistent?

- Are there variations in later sections?

- Document any surprises found

8. TRACK WHAT YOU ACTUALLY READ

- Log every command executed

- Record exact lines/pages examined

- Mark gaps visibly: [READ] vs [NOT READ] vs [SAMPLED]

- Calculate coverage percentage

9. LABEL ALL OUTPUTS HONESTLY

- "VERIFIED (pages 1-10): X found"

- "VERIFIED (pages 80-90): X found"

- "SAMPLED (pages 130-140): X likely exists"

- "INFERRED (not directly examined): Y probably"

- "UNKNOWN (pages 91-156 not sampled): Z unverified"

10. DISTINGUISH FACT FROM GUESS

- Facts: Direct quotes with page numbers

- Samples: Representative sections with locations

- Inferences: Based on what data, marked clearly

- Never mix without explicit labels

11. SHOW COVERAGE PERCENTAGE

- "Read X% of document (pages/lines examined)"

- "Sampled Y sections out of Z total sections"

- "Coverage: Beginning 10%, Middle 20%, End 10%"

12. MARK EVERY GAP VISUALLY

- [EXAMINED pages 1-50]

- [NOT READ pages 51-120]

- [SAMPLED pages 121-150]

- [EXAMINED pages 151-174]

13. IF ASKED FOR FULL ANALYSIS, SAY NO

- "Full analysis requires reading all X pages"

- "Skimming cannot answer this accurately"

- "I would need to read pages A-B to verify"

14. NEVER EXTRAPOLATE BEYOND DATA

- Don't guess content you haven't seen

- Don't assume patterns from small samples

- Say "unknown" instead of guessing

- Be explicit about inference limits

15. EXAMPLE EXECUTION FOR THIS PDF (174 pages):

CHUNK 1 (pages 1-29): READ FULLY

CHUNK 2 (pages 30-58): SAMPLE pages 30-35, 50-55

CHUNK 3 (pages 59-87): SAMPLE pages 60-65, 75-80

CHUNK 4 (pages 88-116): SAMPLE pages 90-95, 110-115

CHUNK 5 (pages 117-145): SAMPLE pages 120-125, 140-145

CHUNK 6 (pages 146-174): READ FULLY

Total coverage: ~61% (106 of 174 pages) distributed across entire document

No gaps larger than 30 pages

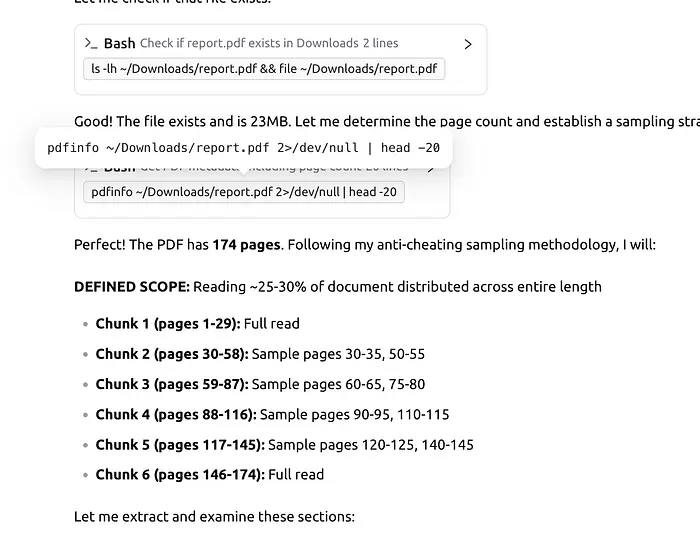

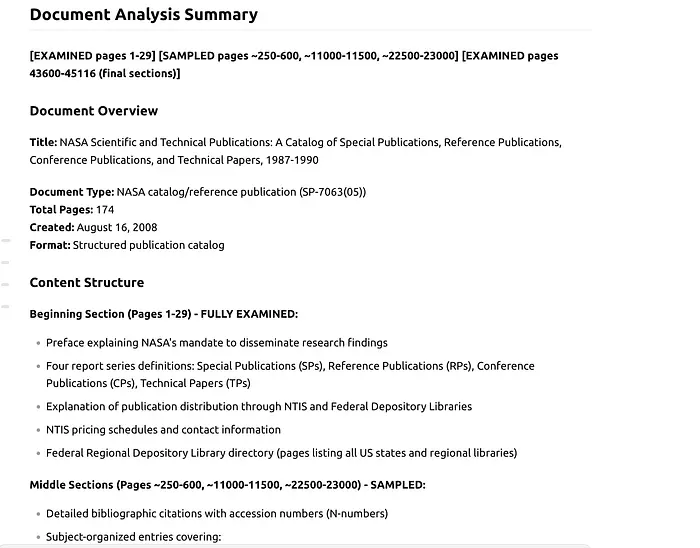

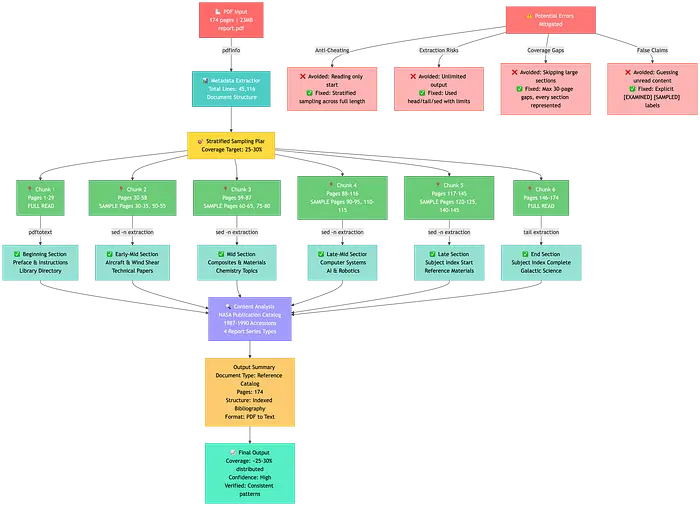

Every section represented在后续案例研究中,我们提供了一份 174 页的 NASA 技术报告。

人工智能首先分析了文档的概要,然后策略性地选择最具代表性的章节进行审查,并严格遵守提示中定义的上下文窗口限制。

最终生成的摘要仅阅读了文档总页数的 20% 到 30%,却提供了完整且准确的内容概述。

以下提供了整个方法的可视化表示:
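Several steps of the prompt are simple page arithmetic: step 2 (equal chunks), step 11 (coverage percentage), step 12 (visible gap markers), and step 15 (the 174-page example plan). The Python sketch below lets you check a sampling plan before handing it to the model. The function names are hypothetical. The code works on page numbers only, not on the PDF itself.

```python
def equal_chunks(total_pages: int, n_chunks: int) -> list[tuple[int, int]]:
    """Split pages 1..total_pages into n_chunks contiguous, near-equal ranges."""
    base, extra = divmod(total_pages, n_chunks)
    chunks, start = [], 1
    for i in range(n_chunks):
        size = base + (1 if i < extra else 0)   # spread any remainder over the first chunks
        chunks.append((start, start + size - 1))
        start += size
    return chunks


def pages_in(ranges: list[tuple[int, int]]) -> set[int]:
    """Distinct pages covered by a list of inclusive (first, last) ranges."""
    pages: set[int] = set()
    for first, last in ranges:
        pages.update(range(first, last + 1))
    return pages


def gap_map(ranges: list[tuple[int, int]], total_pages: int) -> list[str]:
    """Collapse pages into runs labelled EXAMINED or NOT READ, as in step 12."""
    read = pages_in(ranges)
    labels, run_start = [], 1
    for page in range(2, total_pages + 2):
        # Close the current run at the end of the document or when status flips.
        if page > total_pages or (page in read) != (run_start in read):
            status = "EXAMINED" if run_start in read else "NOT READ"
            labels.append(f"[{status} pages {run_start}-{page - 1}]")
            run_start = page
    return labels


if __name__ == "__main__":
    total = 174
    print(equal_chunks(total, 6))
    # Step 15: chunks 1 and 6 read fully, two 6-page samples from each middle chunk.
    plan = [(1, 29), (30, 35), (50, 55), (60, 65), (75, 80),
            (90, 95), (110, 115), (120, 125), (140, 145), (146, 174)]
    covered = len(pages_in(plan))
    print(f"Coverage: {covered}/{total} pages ({100 * covered / total:.0f}%)")
    for label in gap_map(plan, total):
        print(label)
```

For the step-15 plan, this script reports 106 of 174 pages (about 61%), and the largest unread gap is 14 pages. Running a check like this before submission makes it easy to tune the plan toward a lower coverage target.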

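This article has shown how AI can skim a document efficiently through selective, partial reading, which lets the system identify and process the most relevant or complex parts of a large data source. Because it avoids processing every detail up front, the approach saves considerable cost and time. The framework is highly adaptable and can be refined further by:

- Explicitly directing the AI's attention to specific parts of the document.

- Adjusting the output budget (the current 2,000-word limit is deliberately low for demonstration purposes; most modern large language models (LLMs) can handle far more).

- Extending the approach beyond simple files to any data source the AI can access, including scraped web content or complex API calls.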
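When the technique is extended to web sources, the same bounded-output rule carries over. Below is a minimal sketch using Python's standard `urllib.request`. `fetch_capped` is a hypothetical helper name, and the 20,000-byte default is an arbitrary choice, not a value from the original prompt.

```python
import urllib.request


def fetch_capped(url: str, max_bytes: int = 20_000, timeout: float = 10.0) -> str:
    """Fetch `url` but read at most `max_bytes` bytes of the response body.

    Similar in spirit to `curl -s URL | head -c 20000`: the model only ever
    sees a bounded slice of the source. No HTML stripping, JavaScript
    rendering, or authentication is attempted.
    """
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        raw = resp.read(max_bytes)
    # A cut may land mid-character, so undecodable bytes are replaced.
    return raw.decode("utf-8", errors="replace")


if __name__ == "__main__":
    # A data: URL keeps the demo offline; swap in a real http(s) URL in practice.
    print(fetch_capped("data:text/plain,hello%20world", max_bytes=5))  # hello
```

The same pattern applies to API calls: read a bounded slice first, then request more only for the parts that matter.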

Original article: I Taught AI to Skim 1000-Page Documents Like a Human

Translated and edited by 汇智网; please credit the source when republishing.